Microsoft Declares Silicon Independence: AI Chip Revolution to Break Free from Nvidia and AMD

Why Microsoft Is Betting Big on Its Own AI Chips

Microsoft’s Chief Technology Officer Kevin Scott recently revealed at Italian Tech Week that the company plans to prioritize its own custom silicon for powering AI workloads in its data centers. While Microsoft currently uses a mix of Nvidia GPUs, AMD processors, and its own chips, the long-term vision is to build an end-to-end system designed and optimized entirely in-house.

This announcement comes amid an unprecedented surge in demand for AI compute capacity. Scott described the current situation as a “massive crunch,” noting that even Microsoft’s most ambitious forecasts have proven insufficient to meet the explosive growth of AI applications.

Maia and Cobalt

Microsoft isn’t starting from scratch. In 2023, the company launched two proprietary chips:

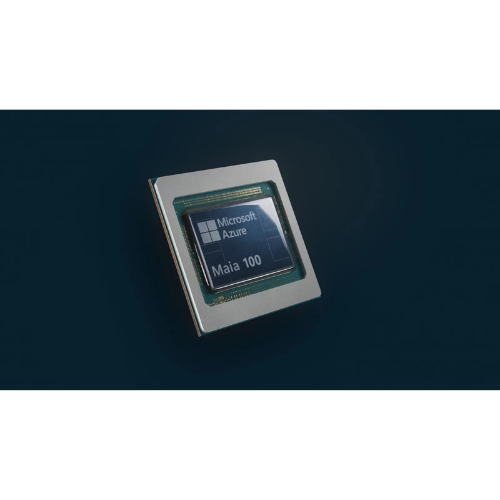

- Azure Maia AI Accelerator: Designed specifically for AI workloads, this chip aims to compete with Nvidia’s GPUs in machine learning and deep learning tasks.

- Cobalt CPU: A general-purpose processor optimized for cloud infrastructure, offering improved performance and energy efficiency.

These chips are already being deployed across Microsoft’s data centers, and the company is reportedly working on next-generation versions with enhanced capabilities.

Why Microsoft Wants to Control Its Infrastructure

The decision to lean into proprietary chips is part of a broader strategy to gain full control over the design and operation of its data centers. As Scott explained, it’s not just about the chips—it’s about designing the entire system, from silicon to cooling to networking.

This level of control allows Microsoft to:

- Optimize performance for specific AI workloads.

- Reduce costs.

- Improve scalability in response to surging demand.

- Enhance security by managing the full hardware stack.

In essence, Microsoft is building a vertically integrated AI infrastructure, similar to what Apple has done with its M-series chips for consumer devices.

Microsoft vs. Nvidia, AMD, Google, and Amazon

Microsoft’s move mirrors similar strategies by other tech giants:

- Google has developed its Tensor Processing Units (TPUs) for AI.

- Amazon uses its Graviton and Trainium chips to power AWS.

- Meta is investing in custom silicon to support its AI and metaverse ambitions.

However, Microsoft’s scale and enterprise reach give it a unique advantage. With Azure serving millions of customers globally, the ability to deploy proprietary chips across its cloud infrastructure could redefine the economics of AI computing.

Still, Nvidia remains the dominant force in AI hardware, and AMD continues to innovate in high-performance computing. Microsoft’s challenge will be to match or exceed the performance benchmarks set by these incumbents while maintaining cost efficiency.

Implications for the AI Industry

Microsoft’s silicon independence has far-reaching implications:

1. Supply Chain

By relying less on third-party chip vendors, Microsoft can better navigate global semiconductor shortages and geopolitical tensions.

2. Innovation

Custom chips allow for faster iteration and optimization, enabling breakthroughs in AI model training and inference.

3. Disruption

If successful, Microsoft could disrupt the AI accelerator market, challenging Nvidia’s dominance and reshaping pricing dynamics.

4. Differentiation

Azure could offer unique performance advantages over AWS and Google Cloud, attracting enterprise clients with specialized AI needs.

Microsoft’s declaration of silicon independence marks a turning point in the evolution of AI infrastructure. By investing in proprietary chips and designing full-stack systems, the company is positioning itself to lead the next wave of innovation in cloud computing.